Brains & Bots: A Disciplined Loop for Better Strategy & OKRs

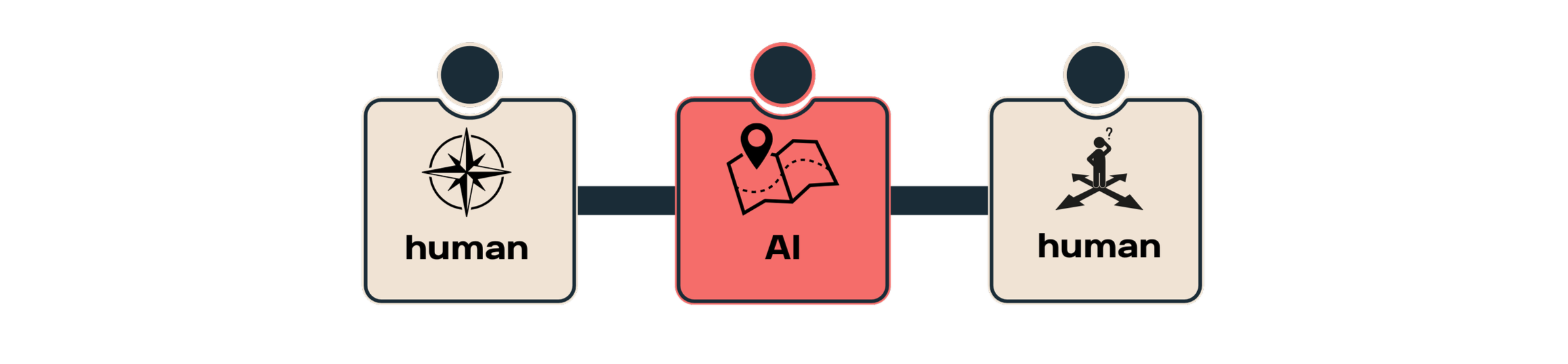

human → AI → human: the simple loop

AI is everywhere, but impact is uneven. Teams are moving fast, then quietly paying the workslop tax: cleaning up low-quality AI outputs. I’m not anti-AI. I love AI, and I can create cute little images like the one above in seconds. I just think we need to think harder about how we adopt it.

I’ve worked with OKRs for about a decade across 15+ organisations, from enterprise portfolios to individual teams, and I’ve been experimenting with AI at every layer to see where it genuinely helps. With current AI capability, the most reliable path is human-set outcomes and trade-offs, with AI as a second-pass assist. You keep judgment. AI accelerates clarity.

TL;DR

Outcomes before prompts. Set the destination in plain English before you use AI.

Human → AI → Human. Humans set intent and measures; AI helps explore and sharpen; humans choose.

Team-controllable signals. Prioritise measures your team can actually move.

Simple rules, visible rhythm. Short cycles, clear stop rules, and a cadence everyone can see.

(But I’d love for you to keep reading 😊)

Why strategy and OKRs feel hard right now

It’s not the tools. It’s the mix of pace, pressure, and ambiguity. Markets shift, people wear multiple hats, and attention is thin. Strategy should narrow choices, but too often it becomes a tidy deck that adds to the noise, gets filed away, and everyone keeps doing what they’ve always done. The pressure is to deliver, not to deliver outcomes. OKRs promise focus and learning, yet when rushed, they read like wishlists or reporting frameworks. The result: busywork, unclear trade-offs, and teams guessing what matters week to week.

Good strategy and OKRs force the hard thinking up front: what we’re really trying to achieve, where we can win, what’s unique about us, and what we’ll not do. When teams pause to set clear outcomes and signals, they run faster later—and engagement climbs. Skipping this step to “keep momentum” almost always slows you down a month later. These are the muscles to keep flexing: clarity, choice-making, and evidence-led course-correction.

The trap I’m seeing

Because everyone’s busy, I’m seeing centralised OKR creation to “speed things up” and AI drafting to “get something down quickly.” We lean on AI to make things sound right and mistake clean language for good decisions. The failure pattern is subtle: shiny wording, vague outcomes, and measures no team can actually move. Momentum stalls.

Common scenarios:

“Let’s use AI so we don’t lose momentum.”

“It’s hard and I don’t have time to think.”

OKRs get retrofitted to work already under way.

Reality: AI doesn’t know your capacity, constraints, politics, risk appetite, regulators, or your unwritten rules. That’s where real strategy lives. And when you do it well, creating strategy is actually fun.

If AI is steering your strategy, you’re taking a risk

When AI leads the goal-setting, it nudges you toward neat language, not better choices. The risks aren’t theoretical:

Direction risk: choosing the wrong goal or measures.

Rework risk: tidy documents today → weeks of fixing later.

People risk: pressure and frustration rise when clarity is missing.

Waste risk: all of the above = lost cycles, lost focus, and wasted money.

Still wondering, “Doesn’t AI save time so we can get back to the work?” Not quite. Adoption is racing ahead, but impact is uneven, and teams end up re-doing, explaining, or re-aligning. That’s time lost.

A quick governance check

Some providers allow consumer chats to be used for training unless you opt out (check privacy settings). This doesn’t make your chats public, but it does mean they may be used to improve models unless you change the setting. Business/education/government plans and most API use are treated differently. Know your configuration. This is particularly important when AI use is hidden and people bring their own AI tools (BYOAI).

Working with AI to save time (without losing judgment)

Speed is useful when you point it at the right moments. Keep humans focused on direction and trade-offs; let AI shorten the path between drafts and decisions.

“A woodcutter starts strong—20 trees a day. Weeks pass; output slips to 6. He works longer hours, creeps up to 8, then drops to 3. It wasn’t an effort issue. The axe was blunt. He takes a day to sharpen and goes back to 20—in less time. Strategy is the sharpening. AI speeds the chopping, but you still choose the angle, the tree and when to stop.”

AI at work recap:

Adoption ≠ impact. Plenty of organisations “use AI”; the lift at organisation level is patchy. Individual time-saves are nice, but they don’t replace strategy work.

Workslop is real. Teams report a steady stream of low-effort AI content that takes hours to fix. That “saved time” didn’t vanish—it moved to someone else. (Two hours of clean-up versus minutes saved is not a good trade.)

Stalled pilots. Many AI pilots don’t scale due to data quality, risk and unclear value. Strategy and OKRs are especially sensitive because they rely on context, trade-offs and trust.

Perception penalty. When colleagues think work used AI, they often rate the author’s competence lower, even on identical output. If you use AI, use it well and be transparent.

Where it backfires vs where it helps

Backfires when:

We jump to solutions before outcomes (AI tidies whatever you feed it).

We apply one-size templates (every organisation/team has nuance).

Ownership drops because teams didn’t co-create (OKRs get filed away).

Helps when:

Tightening language after you’ve named the outcome.

Comparing options against the signals you care about.

Surfacing risks & assumptions you’ve half-named.

Drafting tiny experiments and first-pass comms you’ll still review.

A simple way to work

Set the compass (human). Let AI draft the map. You choose the path.

Human: clarify intent, outcomes, and team-controllable signals.

AI: expand options, tighten wording, surface edge cases.

Human: choose trade-offs, pick the few measures you can move, set stop rules.

Result: strategy stays focused and human; AI helps it travel faster.

How this looks in practice

Human — Write the objective (properly).

Describe the ideal state if we nail it. Note wrong-track signals. Call out constraints (capacity, risk, regulations). Be honest about the space you’ll own.

AI — Help with the thinking work.

Polish the objective so it’s easy to remember (an objective that is long and confusing helps no one). Offer distinct moves (not variants). Suggest draft signals (lead + lag) and stress-tests (what would prove us wrong? what might this break?). Sense-check: if we hit this, would we actually own X?

Human — Choose and commit.

Pick a direction. Set trade-offs and stop rules. Write a three-line decision note (choice, why, when we’ll revisit). Then move.

KRs (quick pass).

Brainstorm what you’d see (behaviours, leading indicators, anti-signals). Trim to team-controllable measures you can track weekly; add source/baseline/target. Ask AI to challenge them: which could mislead; what’s a better substitute. You do the final check.
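For teams that track key results in a script or notebook, the quick pass above could be sketched roughly as follows. This is only an illustration, not a prescribed format: the `KeyResult` class, its field names, the `trim_to_controllable` helper, and the sample figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    """One candidate key result. Every field name here is illustrative."""
    name: str
    source: str              # where the number comes from
    baseline: float          # value at the start of the cycle
    target: float            # value the team is aiming for
    team_controllable: bool  # can the team move this week to week?

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed, clamped to 0..1."""
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return max(0.0, min(1.0, (current - self.baseline) / gap))


def trim_to_controllable(candidates: list[KeyResult]) -> list[KeyResult]:
    """Keep only the measures the team can actually move."""
    return [kr for kr in candidates if kr.team_controllable]


# Made-up brainstorm output, for illustration only.
candidates = [
    KeyResult("Onboarding calls completed within 5 days", "CRM report", 40.0, 80.0, True),
    KeyResult("Company share price", "market data", 10.0, 12.0, False),
]
kept = trim_to_controllable(candidates)
halfway = kept[0].progress(60.0)  # 60 sits halfway between 40 and 80
```

The judgment call, whether a measure is genuinely team-controllable, stays with the humans filling in the flag; the code only keeps the list honest once that call is made.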

Practical and easy guardrails

Outcomes first. If you can’t write the outcome + wrong-track signals in plain English, don’t prompt yet.

Team-controllable measures. Prefer lead signals you can move weekly; add source, baseline, target.

Won’t-do + stop rules. Decide what you’ll pause/stop—and the trigger—up front.

Decision rhythm. Weekly signals, fortnightly decisions, quarterly objectives.

Transparency. Tiny footnote on slides/docs: “AI used? Y/N — where?”

Govern shadow AI. Approve a minimal toolset, teach safe prompts, set data rules. It’s common—govern it.
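One way to make the stop-rule guardrail above concrete is to write the trigger down as data before the work starts. The sketch below assumes a simple form of trigger (a weekly signal sitting below a floor for several weeks running); the `StopRule` class, its fields, and the numbers are hypothetical, and real triggers may well look different.

```python
from dataclasses import dataclass


@dataclass
class StopRule:
    """A pre-agreed trigger for pausing or stopping work (illustrative only)."""
    signal: str
    floor: float         # hypothetical level below which the signal is off-track
    weeks_in_a_row: int  # consecutive weekly readings below the floor

    def __post_init__(self) -> None:
        if self.weeks_in_a_row < 1:
            raise ValueError("weeks_in_a_row must be at least 1")

    def triggered(self, weekly_readings: list[float]) -> bool:
        """True when the most recent readings are all below the floor."""
        if len(weekly_readings) < self.weeks_in_a_row:
            return False
        recent = weekly_readings[-self.weeks_in_a_row:]
        return all(reading < self.floor for reading in recent)


# Made-up weekly signal, for illustration only.
rule = StopRule(signal="trial sign-ups per week", floor=20.0, weeks_in_a_row=2)
stop_now = rule.triggered([25.0, 18.0, 17.0])   # last two weeks below the floor
keep_going = rule.triggered([18.0, 25.0, 17.0])  # only the latest week is below
```

A triggered rule is a prompt for the fortnightly decision, not an automatic verdict: people still decide whether to pause, stop, or adjust.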

“Smarter prompts aren’t a shortcut to strategy. They’re a shortcut to cleaner copy.”

Governance note

Keep it safe, simple and inside your lines. Use approved tools, store drafts where they belong, never paste sensitive data, and keep a human in the driver’s seat for choices that carry risk.

A few practical steps

If you want the benefits without the waste: create space, use a simple process, practise together, and embed it into how you work.

Leaders create space. Don’t cram OKR creation into a 60-minute slot once a year. Dedicate quality time.

Keep the process simple. Use the Human → AI → Human loop above.

Practise. The more you do it, the faster and sharper it gets.

Get team buy-in. Co-create; avoid the centralised “OKR writing office”.

Close the loop. Measure weekly, decide fortnightly, set and review objectives quarterly.

Final thoughts

No amount of AI should replace your strategic work. My number-one move is still to pause, realign and do the upfront thinking. AI can help you identify signals and speed the hard parts—synthesis, options and wording—while you keep the judgment, trade-offs and accountability.

Want help? Book a 60-min intro and we’ll review quality, adoption and engagement. I’ll then map what success looks like, and set up a simple way to create more value, fast.

References

McKinsey: State of AI (2024/25 survey hub). Adoption levels and organisational impact.

https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

Microsoft WorkLab: Copilot early users. Average ~14 minutes/day saved.

https://worklab.microsoft.com/en-us/insights/copilot-early-users

HBR: “AI-Generated ‘Workslop’ Is Destroying Productivity”. Downstream clean-up burden.

https://hbr.org/2025/09/ai-generated-workslop-is-destroying-productivity

HBR: “The Hidden Penalty of Using AI at Work”. Perceived competence hit.

https://hbr.org/2025/08/research-the-hidden-penalty-of-using-ai-at-work

TechMonitor (citing Gartner): ~30% of gen-AI projects abandoned post-POC by 2025.

https://www.techmonitor.ai/digital-economy/ai-and-automation/gartner-forecasts-30-abandonment-rate-for-generative-ai-projects-by-2025

KPMG: “Shadow AI is already here: take control…” (PDF). Practical governance controls.

https://kpmg.com/kpmg-us/content/dam/kpmg/pdf/2025/shadow-ai-already-here-take-control-reduce-risk-unleash-innovation.pdf